Keyword [FlowNet2.0] [FlyingThings3D]

Ilg E, Mayer N, Saikia T, et al. Flownet 2.0: Evolution of optical flow estimation with deep networks[C]//Proceedings of the IEEE conference on computer vision and pattern recognition. 2017: 2462-2470.

1. Overview

This paper proposes FlowNet 2.0, which has the following properties:

1) Only marginally slower than the original FlowNet

2) Decreases the estimation error by more than 50%

3) Faster variants run at up to 140 fps with accuracy matching the original FlowNet
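These notes do not name the error metric. Optical-flow work, including this paper, usually reports the average endpoint error (EPE), so the sketch below assumes that is the "estimation error" meant above. The function name and the toy arrays are illustrative only.

```python
import numpy as np

def average_endpoint_error(flow_pred, flow_gt):
    """Mean Euclidean distance between predicted and ground-truth flow vectors.

    Both inputs have shape (H, W, 2) and hold the (u, v) displacement per pixel.
    """
    diff = np.asarray(flow_pred, dtype=np.float64) - np.asarray(flow_gt, dtype=np.float64)
    return float(np.sqrt((diff ** 2).sum(axis=-1)).mean())

# Toy example: the prediction is off by (3, 4) at every pixel.
flow_gt = np.zeros((4, 4, 2))
flow_pred = np.zeros((4, 4, 2))
flow_pred[..., 0] = 3.0
flow_pred[..., 1] = 4.0
epe = average_endpoint_error(flow_pred, flow_gt)
```

Under this metric, "more than 50%" would mean the mean per-pixel vector distance is at least halved relative to FlowNet.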

1.1. Contribution

1) Shows that the schedule for presenting data during training is very important

2) Introduces a stacked architecture with a warping operation

3) Adds a subnetwork specializing in small motions and creates a special dataset, ChairsSDHom, for it.

1.2. Dataset

1) FlyingThings3D

2) FlyingChairs

2. Dataset Schedules

1) The best performance comes from training on Chairs first, then fine-tuning on Things3D

2) FlowNetC outperforms FlowNetS
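A minimal sketch of what "train on Chairs, then fine-tune on Things3D" could look like as a step-based schedule. The function name and the stage lengths are hypothetical, picked only to keep the example small. They are not the iteration counts used in the paper.

```python
def dataset_for_iteration(step, chairs_steps, things_steps):
    """Return the dataset to sample from at a given training step.

    The first `chairs_steps` steps use FlyingChairs; the following
    `things_steps` steps use FlyingThings3D.
    """
    if step < 0 or step >= chairs_steps + things_steps:
        raise ValueError("step outside the schedule")
    return "FlyingChairs" if step < chairs_steps else "FlyingThings3D"

# Hypothetical stage lengths for illustration only.
schedule = [dataset_for_iteration(s, chairs_steps=3, things_steps=2) for s in range(5)]
```

The point of the schedule is the ordering: the simpler dataset comes first and the more complex one is used only for fine-tuning, rather than mixing both from the start.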

3. Architecture

3.1. Stacking Two Networks for Flow Refinement

1) Stacking without warping yields better results on Chairs but worse results on Sintel, which indicates over-fitting.

2) Stacking with warping always improves results.

3) Adding an intermediate loss after the first network helps when the stacked network is trained end-to-end.

4) The best performance comes from training the networks one by one, keeping the earlier network fixed while the next one is trained.
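The warping step can be sketched as follows: the second image is sampled at positions displaced by the current flow estimate, so that a later network only has to predict the remaining error. This is a NumPy illustration with bilinear interpolation, not the paper's implementation. The function name, the border clamping, and the toy images are assumptions of this sketch.

```python
import numpy as np

def warp_backward(image2, flow):
    """Warp image2 toward image1 by sampling image2 at (x + u, y + v).

    image2: (H, W) or (H, W, C) float array.
    flow:   (H, W, 2) array with flow[..., 0] = u (horizontal) and
            flow[..., 1] = v (vertical).
    Sample positions outside the image are clamped to the border.
    """
    h, w = flow.shape[:2]
    ys, xs = np.mgrid[0:h, 0:w].astype(np.float64)
    x = np.clip(xs + flow[..., 0], 0, w - 1)
    y = np.clip(ys + flow[..., 1], 0, h - 1)

    # Integer corners and fractional weights for bilinear interpolation.
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = x - x0
    wy = y - y0
    if image2.ndim == 3:  # broadcast the weights over the channel axis
        wx = wx[..., None]
        wy = wy[..., None]

    top = (1 - wx) * image2[y0, x0] + wx * image2[y0, x1]
    bottom = (1 - wx) * image2[y1, x0] + wx * image2[y1, x1]
    return (1 - wy) * top + wy * bottom

# Toy example: image2 is image1 with its content moved one pixel to the right,
# so the true flow is u = 1, v = 0 everywhere.
image1 = np.tile(np.arange(6, dtype=np.float64), (4, 1))
image2 = np.roll(image1, 1, axis=1)
flow = np.zeros((4, 6, 2))
flow[..., 0] = 1.0

warped = warp_backward(image2, flow)
brightness_error = np.abs(image1 - warped)
```

With a correct flow the warped image matches the first image everywhere except the last column, where the sample falls outside the image and is clamped. In the paper, the next network in the stack receives the warped image and this brightness error alongside the two input images and the flow estimate.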

3.2. Stacking Multiple Diverse Networks

Applying a network with the same weights multiple times and also fine-tuning this recurrent part does not improve results.

3.3. Small Displacement Network and Fusion

1) Fine-tune the whole FlowNet2-CSS for smaller displacements on a mixture of Things3D and ChairsSDHom; the result is denoted FlowNet2-CSS-ft-sd.

2) FlowNet2-SD: a modified FlowNetS (stride 2 removed from the first layer, …)

3) A small network fuses FlowNet2-CSS-ft-sd and FlowNet2-SD and produces a full-resolution output.

The final network is denoted FlowNet2.

4. Experiments

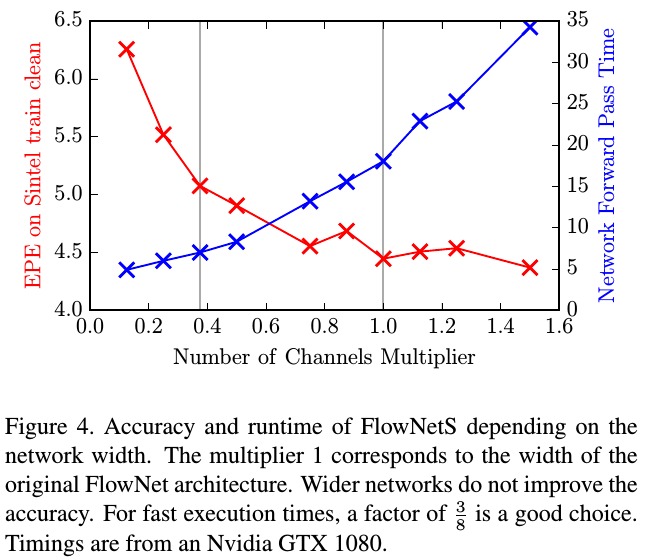

4.1. Runtime

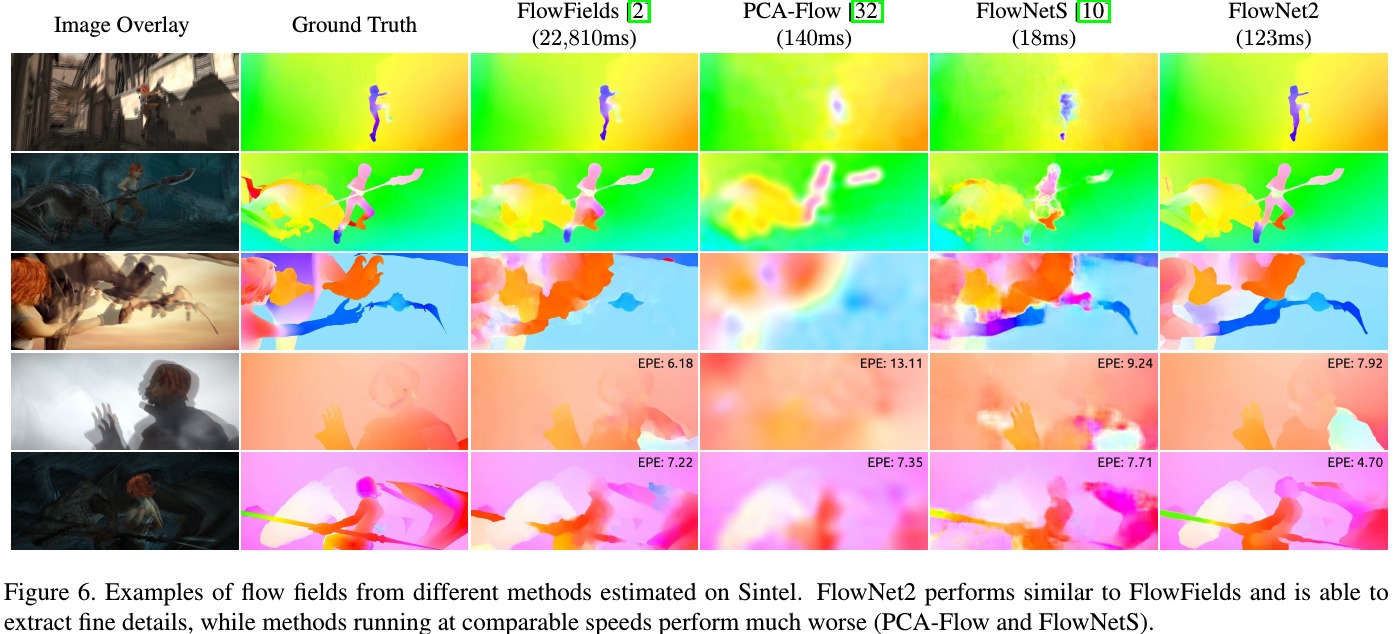

4.2. Visualization